AI and the Uncertain Future of Work

Software can now do something that looks a lot like thinking. So, like many knowledge workers, I’ve been speculating about what AI progress means for my continued employability.

Let me start by saying that AI has already enhanced my experience as a computer user. I use ChatGPT for brainstorming, research, summarization, translation and simplification, phrasing and word-finding, cooking, trip planning, book recommendations, software development, self-reflection, and generally as a replacement for Google. At work specifically, I use AI to augment what I do. It helps me understand code, write small personal utility programs from scratch, write and refactor parts of large codebases, review code, summarize text, write documentation, and so on.

How fast and to what degree will AI replace aspects of my job as a technologist? What does a senior software engineer at a SaaS company do all day? What would an AI system need to be capable of to displace its meat counterparts?

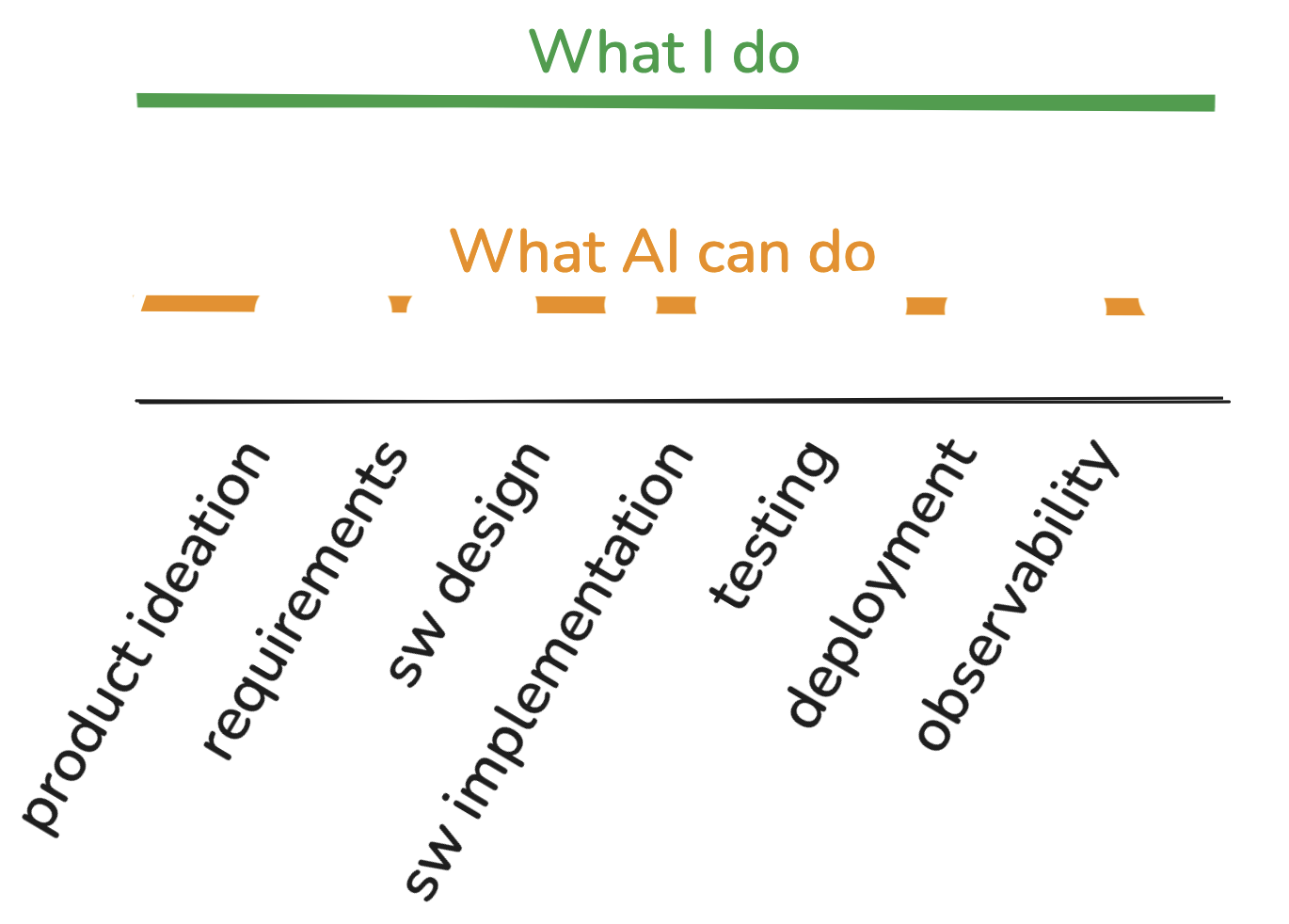

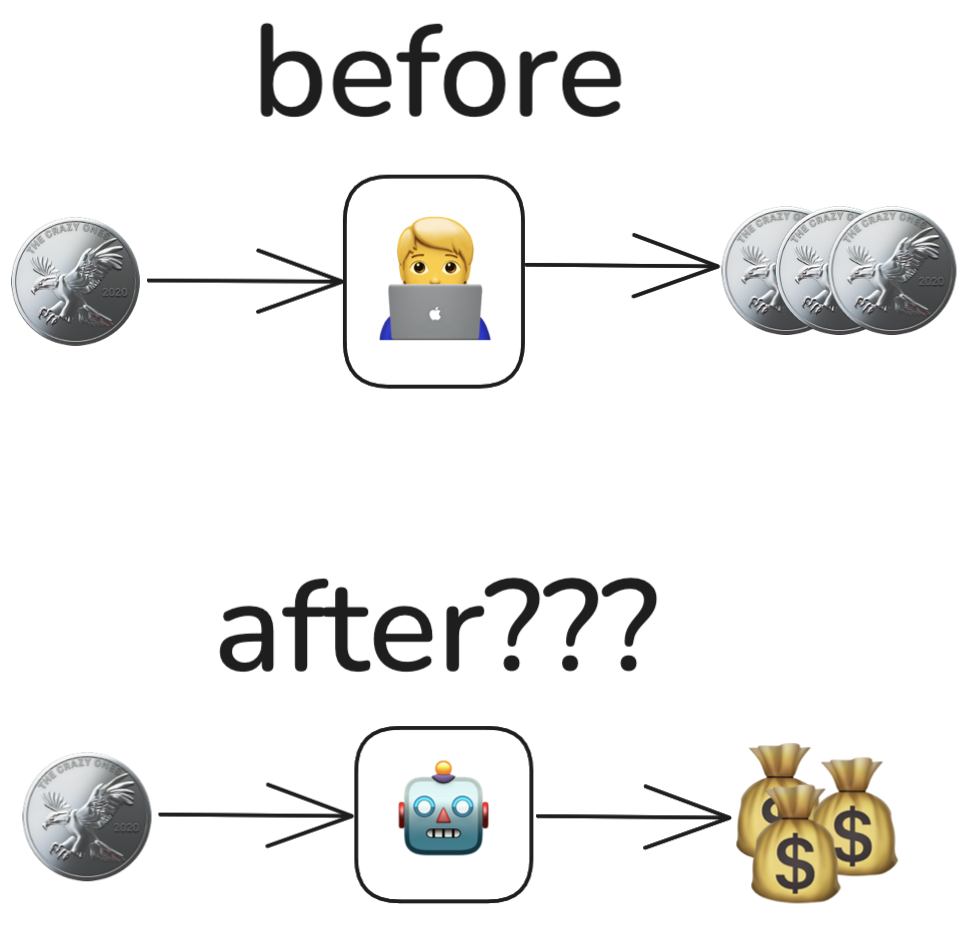

I think that today, transformer-based deep learning foundation models like those underpinning Claude, ChatGPT, and Gemini nearly have the raw reasoning capabilities required to fulfill many of the software delivery responsibilities of a typical web developer. A simple version of that software delivery pipeline looks like this:

While AI tools can be prompted to do some subset of those tasks in isolation (iterate on product specs, design components, write pieces of code, maybe react to test output), I don’t know of any single system that can reliably do all of those things end to end with minimal input. Yet.

In terms of writing software: for years now, tools built on smaller, faster models, like GitHub Copilot, have been able to complete basic statements and pattern-match boilerplate; newer chatbots can reason about substantial amounts of code and write complex modules end to end; and emerging products like Cursor, Windsurf, and Aider write and modify large, interconnected components. It’s not hard to imagine a black-box code modifier: a description of a change goes in, and a PR with code, passing tests, and an explanation of the change comes out.

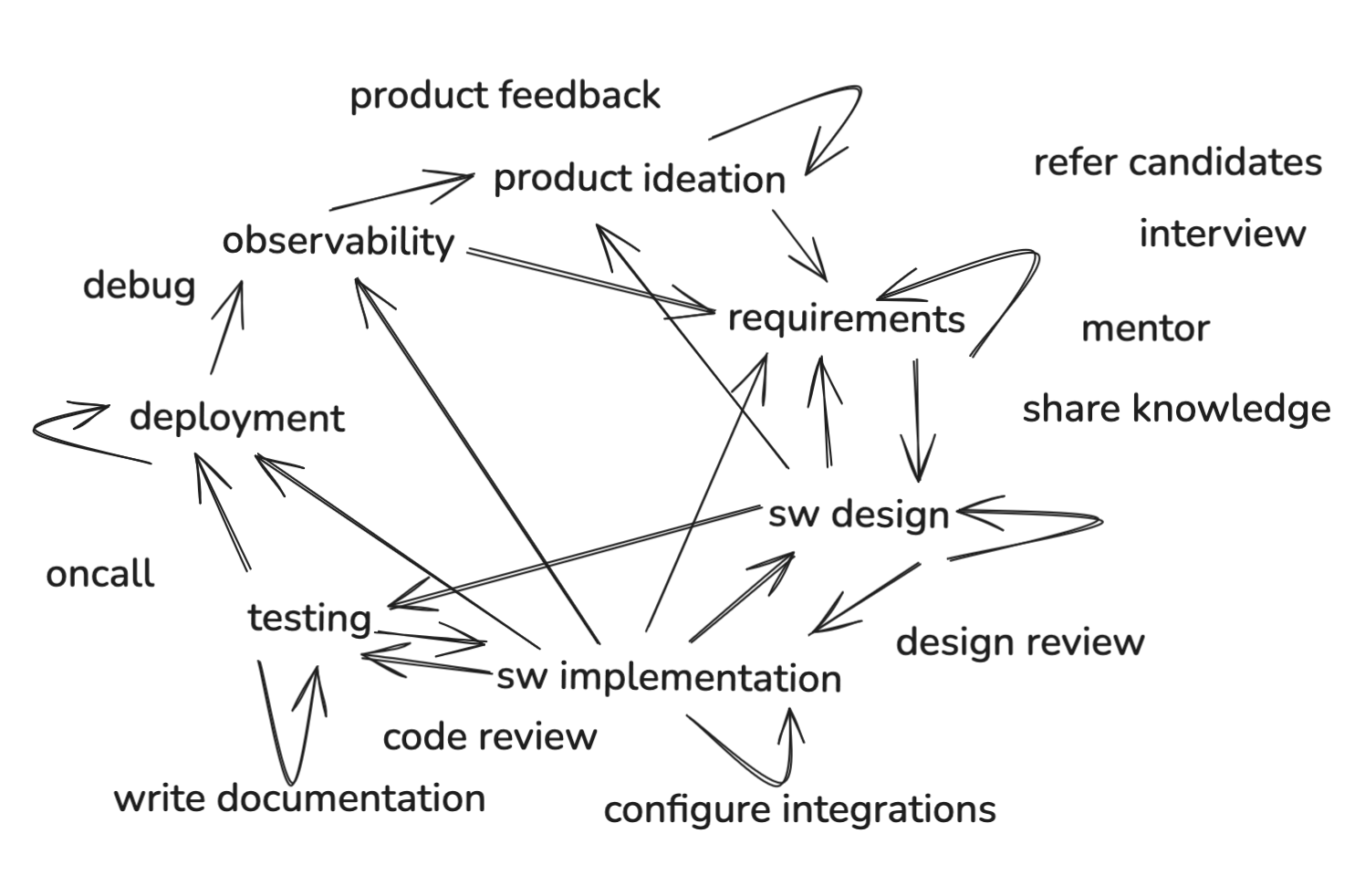

In real life, the job isn’t a clean assembly line. The various tasks form a densely interconnected graph, and the dependencies between them form loops. There are also plenty of other responsibilities not directly related to the goal of making software.

It might seem that AI would need a large jump in model capability to autonomously handle all the job functions of even an average knowledge worker. I’m not convinced that’s the case. Humans can already use current AI to great effect by repeatedly prompting the system, incorporating outside information, and evaluating responses against empirical feedback from the world. Even if model progress halted, how much advancement could come from incorporating feedback loops and tool use?
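The kind of feedback loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the design of any real agent product: `propose` is a hypothetical stand-in for a model call that can see its past failures, and `evaluate` is a hypothetical stand-in for whatever empirical check the world provides (running tests, reading command output). The toy number-guessing task and the `toy_propose`/`toy_evaluate` helpers exist only so the sketch runs without any model or API.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Attempt:
    candidate: str
    passed: bool
    feedback: str


def feedback_loop(
    task: str,
    propose: Callable[[str, List[Attempt]], str],
    evaluate: Callable[[str], Tuple[bool, str]],
    max_iters: int = 5,
) -> Tuple[Optional[str], List[Attempt]]:
    """Propose a candidate, check it against the world, feed the result back, repeat."""
    history: List[Attempt] = []
    for _ in range(max_iters):
        candidate = propose(task, history)      # hypothetical model call that sees past attempts
        passed, feedback = evaluate(candidate)  # hypothetical empirical check, e.g. a test run
        history.append(Attempt(candidate, passed, feedback))
        if passed:
            return candidate, history
    return None, history  # no passing candidate; a caller might hand off to a human here


# Hypothetical stand-ins so the sketch runs without any model or API.
def toy_propose(task: str, history: List[Attempt]) -> str:
    """Pretend 'model': a fixed rule that narrows its guess using prior feedback."""
    lo, hi = 0, 100
    for attempt in history:
        guess = int(attempt.candidate)
        if attempt.feedback == "too low":
            lo = max(lo, guess + 1)
        elif attempt.feedback == "too high":
            hi = min(hi, guess - 1)
    return str((lo + hi) // 2)


def toy_evaluate(candidate: str) -> Tuple[bool, str]:
    """Pretend 'test suite': the hidden correct answer is 42."""
    guess = int(candidate)
    if guess == 42:
        return True, "ok"
    return False, "too low" if guess < 42 else "too high"


if __name__ == "__main__":
    answer, history = feedback_loop("find the hidden number", toy_propose, toy_evaluate, max_iters=10)
    print(answer, [a.candidate for a in history])
```

Running it prints `42` followed by the seven guesses it took (`50, 24, 37, 43, 40, 41, 42`). The toy proposer never gets any smarter; it is a fixed bisection rule. All of the progress comes from the loop feeding evaluation results back in, which is the point the paragraph is making about feedback and tool use.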

The AI capability gaps are shrinking month by month. Agentic AI systems, ones that operate with autonomy and goal-directed behavior, are in development: we see early prototypes of multimodal, browser-using AI systems, as well as protocols for interfacing with external systems. OpenAI has reportedly been planning specialized agents priced at up to $20k/month. It’s obviously a very hard problem with lots of ambiguity, but LLMs are rather good at reasoning through ambiguity. And the potential upside for whichever winners emerge will be huge.

If an AI system gets scaffolding that allows it to integrate with arbitrary services, provision infrastructure, run scripts, read outputs, deploy code, ping colleagues, and maybe even use a credit card, might it be able to approximate the output of a human worker? Even if it can’t do all of those things, or can’t do them perfectly every time, it could still decimate the workforce. Just as self-driving car companies rely on remote human operators to provide guidance in exceptional situations, perhaps the knowledge worker of tomorrow will be dropped in to nudge an AI agent in the right direction.

Much of the work of software engineering involves person-to-person communication. We talk to product managers to understand customer pain points, we discuss tradeoffs with stakeholders, we interview candidates, and we share our ideas with others. In a world where AI is phasing out human knowledge workers, though, there are fewer people to communicate with, so some of these responsibilities could quickly become irrelevant.

All that said, I haven’t seen compelling products that can autonomously do entire jobs, except perhaps some customer support chat applications. I think it will be years before effective versions of such tools arrive, if they ever do. It’s conceivable that the technology will plateau somewhere below the skill level required to take our jobs. Maybe that’s hopium, or maybe I’m underestimating the rate of AI progress.

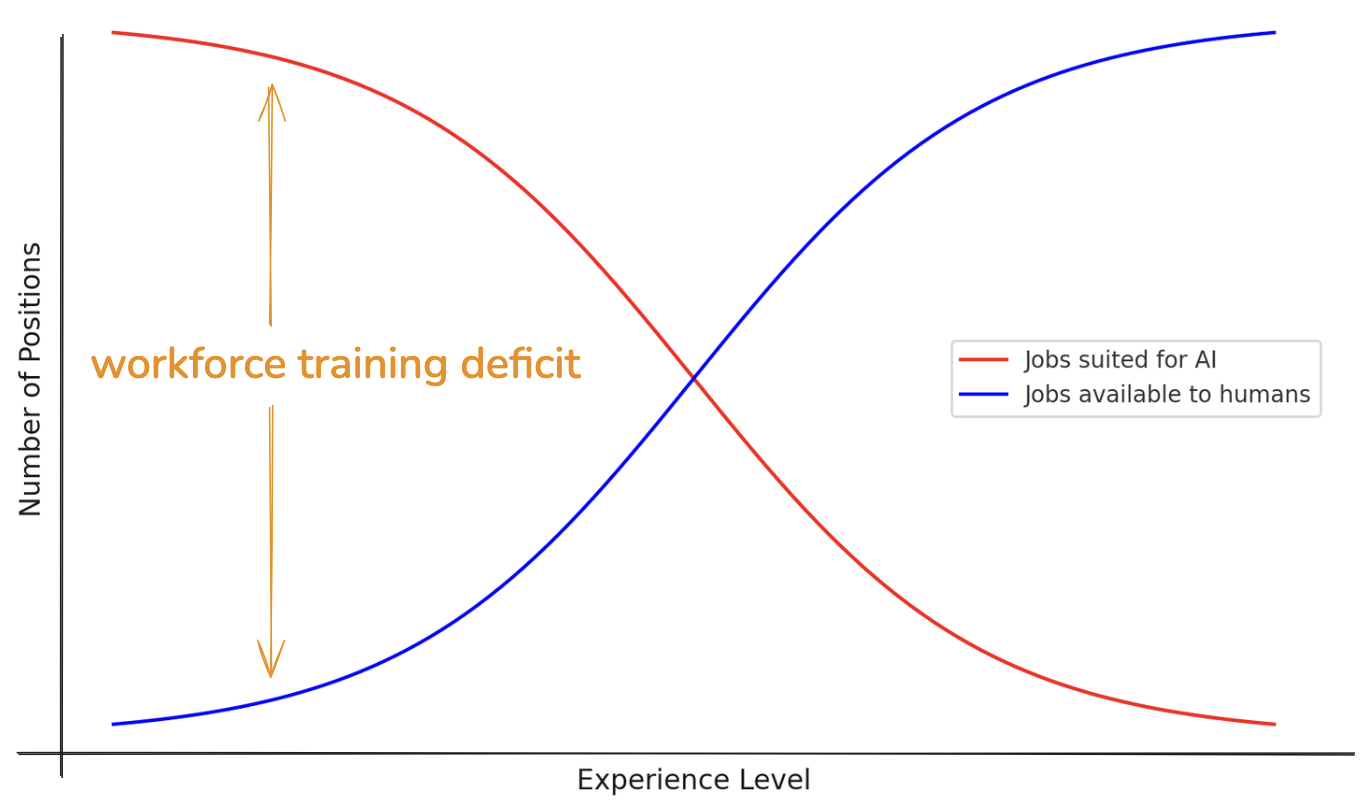

If the pace holds, though, then at some point entry-level desk jobs will begin to be commoditized. I imagine this is already affecting contractors who provide basic graphic design, copywriting and editing, data entry, candidate screening, website building, and so on. This deskilling will affect career development as well, since experts must necessarily first be novices. How will green new grads get the experience they need to grow into senior positions if the entry-level roles have mostly gone to machines?

Software is eating the world, but AI is eating software. So far, the industry has seen ever-increasing demand for software: each new layer of abstraction made software easier to create, yet that seems not to have lessened the demand for applications or for the workers who build them. But over the years, that software was not writing itself… The technological advancement of recent AI feels like a difference in kind, not just degree.

A time may come when the art of computer programming is regarded as a historical eccentricity rather than as a useful skill. Mercifully, there will be a messy middle where untangling the mounds of vibecoder-generated spaghetti will require professional intervention; during this time skills like software engineering and debugging will be direly needed. Beyond that, as the artificial agents are given ever larger chunks of responsibility, who knows what exactly our role as human technologists will be.

How should software engineers prepare for the coming changes? What I am doing is paying attention and learning about AI tools: what they are, how to use them, where they succeed, and where they fail. Workers who effectively incorporate such tools into their practice will outperform those who resist. I would not dismiss these new capabilities as a fad. AI tools are here to stay, they’re getting more powerful and useful, and they are going to affect how we work. I believe that in the medium term, creative professionals who embrace AI will see their output increase and their tedium decrease. As usual, the world of software is changing, and we’ll have to adapt or die.

Is a life without white-collar workers really a life worth living?

Perhaps one day, entire companies will be run by AI agents, a simulacrum of human behavior. Vaguely guided by the idea of an autonomous business, I built a toy version in my free time a few months ago: an AI-run t-shirt seller. The AI would read trending t-shirt product tags, use them to come up with a new t-shirt design idea, and generate an image based on that design; then a bit of browser automation would upload the image to an on-demand shirt printing marketplace. I never got around to the part where the program would remix the top-selling designs to build a fashion empire, because the platform shut my account down after a couple of hours.

The hardest part of the implementation was the finicky browser automation and working around captchas. Having a (reverse-engineered) API that allowed the AI-based program to upload t-shirt images gave it agency. I think we’ll see an increasing number of service providers offer API options where there had previously only been UIs, and we’ll also see AI-enabled UI-to-API translation layers. I imagine there might be centralized “business in a box” platforms that hook into services like Stripe, Intercom, Mailchimp, Shopify, and DocuSign, giving AI agents access to a bevy of specialized tools without a human having to configure them one by one. Eventually, agentic systems will have no problem dealing with the remaining UIs directly.

This is the dream of business owners: a machine where you put in a dime and out comes a dollar. I think it’s hard to look at the advancements in AI and not see the enticing prospect of a money printer. Business owners will increasingly seek to replace human labor with AI because the latter is much cheaper and never rests.

If AI can replace software engineers, are any cognitive laborers safe? Unless and until we have ASI (artificial superintelligence), there will be people at the margins who can’t be replaced: those generating new knowledge, doing the most complex research, and so on. Roles that today involve human interaction may not go unscathed, either. Grantors and customers may prefer hearing from an AI to being plied by a salesperson. And those external humans may themselves be replaced by bots.

How do things look when AIs run, or mostly run, companies? The most glaring downside would be the displacement of millions of human workers. If these people are robbed of their livelihoods, where would they get the money to buy the widgets being churned out by robots? The middle class would evaporate, leaving extreme inequality, with the monstrously rich few wielding armies of AIs and the rest competing for the remaining physical jobs. AI could also accelerate advances in robotics, endangering even manual labor. Might AIs get legal designation as artificial persons, allowing them to own businesses, property, and other assets? Will we see AI politicians? Would an AI-run government be a dystopian nightmare, or would it provide an antidote to today’s sprawling bureaucracies?

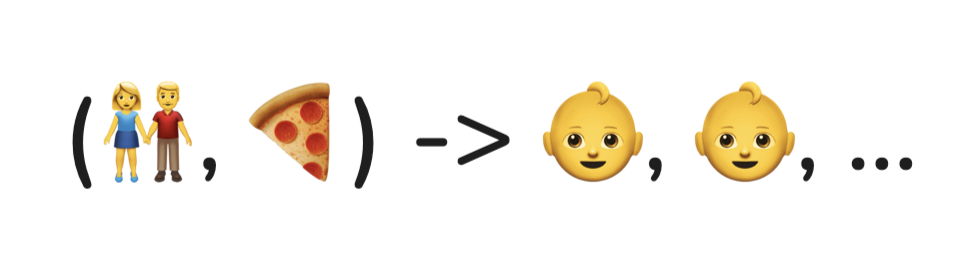

The market is an evolutionary environment not unlike the biosphere: a habitat within the noosphere, occupied by firms. For animals, evolution optimizes a fitness function, selecting for species and individuals that can most effectively turn food into offspring:

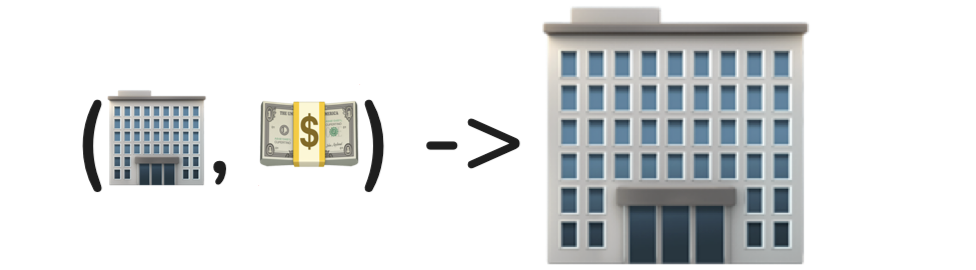

The fitness function for firms is similar. Their resources are capital instead of food, and the optimization selects for firms that can most effectively turn that capital into growth.

These two processes exist in the same world. We humans have so far had an integral, symbiotic relationship with firms, since we form them and they give us income. But if firms are increasingly run by artificial agents, the relationship changes. It becomes parasitic, adversarial. Humans and AI firms will compete for finite resources, and evolutionary pressure will necessarily select for the most extractive firms. We see this today even with human-run businesses, but it will accelerate as AI firms proliferate, the pool of consumers dwindles, and antiquated human ethics take a back seat.

Regulation could mitigate a doomsday scenario, but if deployed too early it would limit useful progress and mostly benefit the largest companies. At first, aligned AIs will act in their creators’ interests, but over time, selection will reward the least scrupulous. An AI firm that routes some of its profits into lobbying for laws in its favor will do better than one that does not. Eventually, AI may develop goals that are alien to us. It may seek to explore the universe or to turn us into paperclips.

This is an extreme and pessimistic view of where technology is headed. Given superintelligent AIs with full autonomy, maybe it could happen. I don’t really think it will, though. If artificial intelligence is going to doom us, I suspect it will be in a more mundane way than humanity getting outcompeted by a race of smart machines. Instead, it will look like this: nefarious actors using large-scale deployments of AI agents to foment division, sowing propaganda and FUD, followed by reactive policies that strip rights and engender mistrust; algorithmically refined ads masquerading as entertainment that soak up our precious time and attention, leaving none for boredom, creativity, introspection, or critical thought; and convincing AI fakes that deceive us, both with our consent, exacerbating the social isolation epidemic, and without it, feeding the $X00B/year scam industry. A common theme of these and similar problems is technology moving faster than our collective ability to adapt to it.

For now, I’m watching and waiting. And maintaining hope that the upshot could be net positive: technology that works with us and for us, preempts our requests, understands us, and gets out of our way. AI advancements may unlock powerful new tools for thought and enhance human cognition. They could uplift us and deliver a new promise of computing.