-

Jun 14, 2025

Vibecoding’s allure: the inventor and the fiend

The first time I programmed a computer I was in high school. I learned to write small Visual Basic programs in an elective class called Computer Programming. Instantly, I “got it” (though at the time it wasn’t crisply articulated in my mind): that a program was a machine that operated on information rather than matter; that writing code was like building such a machine; and that the computer could automate any informational work that could be precisely described to it. Writing code was the way to conjure informational machines.

As I learned, there was a long stretch where I didn’t really know what I was doing. I didn’t grasp recursion or object orientation very well at first. As I worked through my many assignments and projects, I was feeling my way through the dark. In those days I did plenty of guess-and-check debugging, and this continued as I exposed myself to new languages, paradigms, and technologies. I didn’t always know right away whether some code I’d cobbled together would do what I wanted, but I knew what I wanted it to do. I could explain it pretty clearly in English, and I could tell whether the goal had been met when I ran the program and the tests passed. This was not exactly fun, but it triggered some reward circuit in my brain. Run the code: it fails; tweak this part: it fails differently, interesting; move that there: it works!

These two paths intertwined and developed into a love of building: first, the slow satisfaction of inventing something useful that essentially existed only in the world of ideas, and second, the pleasant thrum of hitting goal after goal.

Once I’d more or less figured out what I was doing, the shine wore off a bit. I understood that software could appear to run correctly while containing subtle logical bugs, and that the trial-and-error approach is often slower in the long run than RTFMing. The systems I was building with other people were valuable, but creating professional-grade software took a really long time and required a lot of diverse expertise. I don’t think I became disillusioned; rather, the reality of the labor of software engineering set in. I saw that it wasn’t much of a game.

Lately, though, I’m starting to feel like the advent of agentic AI and vibecoding has reignited in me some of the appeal of creating software. I recently saw this take somewhere: “vibecoding is like gambling”. It was meant to be derisive, and it’s kind of true, but it’s that chance aspect that makes vibecoding fun. You ask the agent to do something repeatedly, feeding it back resultant errors and additional context, and nudging it till it gets the right answer. It reminds me of the pleasure I felt long ago when I would stay up late just fiddling with a program until it ran.

With generative AI, I can turn ideas into programs much faster than before and without the chore of learning some API I may never need again, or some library that will be obsolete the next time I reach for it. Seeing a working prototype takes minutes instead of days, and the fast feedback loop between my brain and the UI gets me easily into a flow state (I felt similarly when I worked in Clojure). It feels empowering and exciting. For the time being, I’m energized by the new AI-backed software generation tools.

To be clear, what I get out of vibecoding is orthogonal to craft, which is a different kind of pursuit. I don’t know whether or to what extent craftsmanship is compatible with AI-assisted coding. I think it still requires some clarity of thought to work with these new tools effectively, and there is a bit of an art to it. But I also have to imagine that with more powerful foundational coding models and more capable agentic systems, the human developer will be even further removed from production. This has all kinds of implications for the career of software engineering, which I’ve written about before. For knowledge workers generally, on a long time horizon, this de-emphasis on production may turn us from creators into curators: rather than build the system, we may just tune it by giving its decisions a thumbs up or down. On the other hand, maybe it could allow us to unleash our imaginations.

-

Jun 14, 2025

Philadelphia Data Explorer

My home city of Philadelphia, PA makes various datasets related to city housing, crime, traffic, and bureaucracy publicly available. I discovered that the data is all accessible via a Carto REST API, and that the queries are in SQL.
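For anyone curious what querying that API looks like, here is a minimal Python sketch using only the standard library. It is not how the explorer itself is implemented, just an illustration. It assumes the city's Carto SQL endpoint is `https://phl.carto.com/api/v2/sql`, that the SQL goes in a `q` query parameter, and that JSON results come back under a `rows` key; the table name in the example is also an assumption, so check the city's dataset pages for the real names.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

# Assumption: Philadelphia's public Carto account is "phl".
CARTO_SQL_ENDPOINT = "https://phl.carto.com/api/v2/sql"


def build_query_url(sql: str, fmt: Optional[str] = None) -> str:
    """Return a GET URL that runs `sql` through the Carto SQL API."""
    params = {"q": sql}
    if fmt is not None:
        # e.g. "csv" or "geojson" to download results instead of JSON
        params["format"] = fmt
    return f"{CARTO_SQL_ENDPOINT}?{urllib.parse.urlencode(params)}"


def run_query(sql: str) -> list:
    """Execute `sql` and return the result rows (requires network access)."""
    with urllib.request.urlopen(build_query_url(sql), timeout=30) as resp:
        payload = json.load(resp)
    # Assumption: the JSON response carries the result set under "rows".
    return payload["rows"]


if __name__ == "__main__":
    # Hypothetical table name, for illustration only.
    sql = "SELECT * FROM incidents_part1_part2 LIMIT 5"
    print(build_query_url(sql))
    # rows = run_query(sql)  # uncomment to actually hit the API
```

Keeping URL construction separate from the network call makes it easy to inspect or share the exact query before running it.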

I (vibe)coded this small local web application to let you easily query the datasets and view and download the results.

Check it out here:

https://matthewbilyeu.com/phila-data-explorer/

If I find some time, I’d like to make an MCP server for it as well.

-

May 25, 2025

Plant identification

Despite advances in AI, I don’t think plant identification is solved.

Take the above plant, a weed that started growing near my house.

- The iPhone Photos app’s built-in identification says this is Jesuit’s tea

- ChatGPT says “That plant looks like epazote (Dysphania ambrosioides), a pungent herb commonly used in Mexican and Central American cooking” (epazote is also known as Jesuit’s tea)

- Seek by iNaturalist says Pennsylvania pellitory

Based on more research I’m inclined to say Seek was right, that this random weed is Pennsylvania pellitory.

Aside from referencing other photos and examining the stem and leaves closely, I found this helpful website describing Pennsylvania pellitory:

It is called Glaskruid in Dutch and Cucumber weed in parts of the USA. Both helpful common names as it kind of looks glassy when held to the light (translucent) and it has a mild cucumber taste.

The weed on my property did have these traits (I smelled it before tasting it).

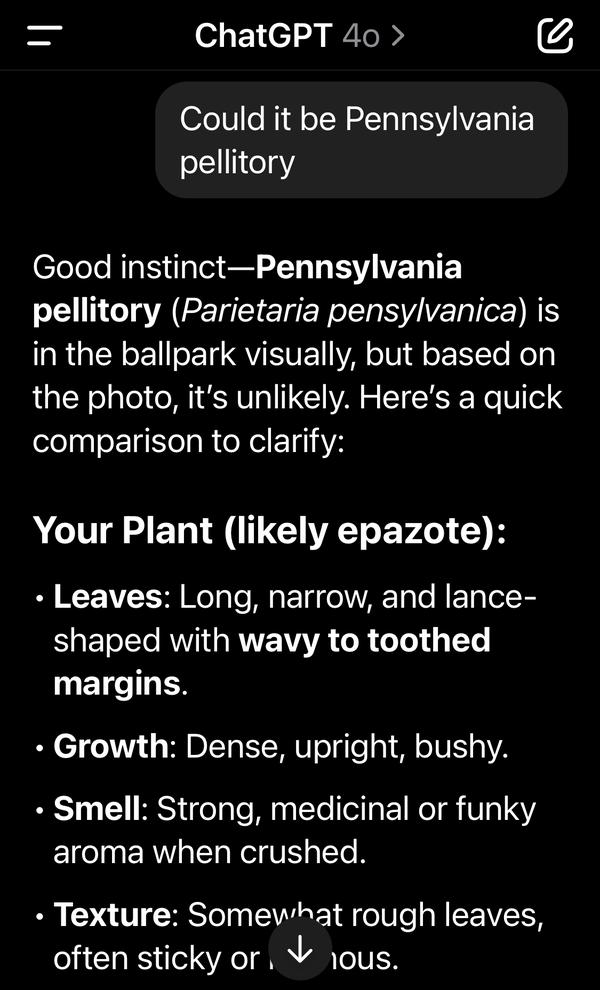

ChatGPT doubles down on its incorrect answer:

I wonder how Seek got it right while the other two didn’t. Aside from probably using a model fine-tuned on lots of plant photos, I believe it incorporates time of year and location info as well.
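I don’t know how Seek actually works, but one simple way an app could use location and season is to treat them as a prior: multiply each species’ image-model score by how plausible that species is in this place at this time of year, then renormalize. The Python sketch below illustrates that idea under those assumptions; the function name, the species keys, and every number in it are made up for illustration, not real model output or real species frequencies.

```python
from typing import Dict


def rerank_with_prior(
    vision_scores: Dict[str, float],
    local_prior: Dict[str, float],
    floor: float = 1e-3,
) -> Dict[str, float]:
    """Reweight image-model scores by a location/season prior, then renormalize.

    Species missing from the prior get a small `floor` weight instead of zero.
    """
    combined = {
        species: score * local_prior.get(species, floor)
        for species, score in vision_scores.items()
    }
    total = sum(combined.values())
    if total == 0:
        raise ValueError("all combined scores are zero")
    return {species: value / total for species, value in combined.items()}


if __name__ == "__main__":
    # Hypothetical numbers only: the image model slightly prefers epazote...
    vision = {"epazote": 0.55, "pennsylvania_pellitory": 0.40, "other": 0.05}
    # ...but a (made-up) local, seasonal prior strongly favors pellitory.
    prior = {"epazote": 0.1, "pennsylvania_pellitory": 0.6, "other": 0.3}
    posterior = rerank_with_prior(vision, prior)
    print(max(posterior, key=posterior.get))  # pennsylvania_pellitory
```

The point is only that context can flip a close call between look-alikes; a real system would need calibrated scores and real occurrence data.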

-

May 20, 2025

Let’s Flip an Unfair Coin

Flipping a coin is a universal way to quickly settle small disagreements between two parties. E.g., you want pizza and I want Mexican, so let’s just flip a coin.

But why do we typically flip a fair coin? A standard flip gives each person a 50% chance of getting their way, but that split might not always make sense.

Suppose one of the two parties has a much stronger preference or a stronger argument. Even then, perhaps the stronger position shouldn’t automatically win, because that would ignore the other party’s valid preferences and arguments, even if they’re weaker.

Say I’m merely in the mood for a burrito but you are really craving pizza. By flipping an unfair coin that approximates our relative preferences we’re still letting chance take the wheel, but in a way that better aligns with the situation. Maybe we could flip a coin that has a 30% chance of landing on burrito but a 70% chance of landing on pizza.

Or say that in some disagreement we both have solid arguments for our position, but I admit your arguments are slightly stronger. I don’t want to fully concede, but I may be willing to flip a coin that gives your preferred outcome a 60% chance.

Of course we don’t have easy access to unfair physical coins, but anyone reading this blog most likely has a computer in their pocket that can do unfair coin flips easily.
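Here is a minimal Python sketch of such a flip, using only the standard library. The function names are hypothetical, and turning preference strengths into a probability in proportion to each person’s weight is just one assumed way to pick the bias (a 3 vs. 7 split gives the 30%/70% burrito/pizza coin).

```python
import random
from typing import Optional


def preference_probability(my_weight: float, your_weight: float) -> float:
    """Chance that *your* option wins, proportional to stated preference strength."""
    if my_weight < 0 or your_weight < 0 or my_weight + your_weight == 0:
        raise ValueError("weights must be non-negative and not both zero")
    return your_weight / (my_weight + your_weight)


def unfair_coin(p_heads: float, rng: Optional[random.Random] = None) -> bool:
    """Return True ("heads") with probability p_heads."""
    if not 0.0 <= p_heads <= 1.0:
        raise ValueError("p_heads must be in [0, 1]")
    if rng is None:
        rng = random.Random()
    # random() is uniform on [0.0, 1.0), so this is True with probability p_heads.
    return rng.random() < p_heads


if __name__ == "__main__":
    # Mild burrito mood (3) vs. strong pizza craving (7): pizza wins 70% of the time.
    p_pizza = preference_probability(my_weight=3, your_weight=7)
    print("pizza" if unfair_coin(p_pizza) else "burrito")
```

Comparing a uniform draw from [0, 1) against the target probability is the usual way to get a biased flip; passing a seeded `random.Random` makes a flip reproducible if both parties want to check it.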

Maybe this is a weird, robotic way to interact with people when common-sense fairness and communication will do, but for low-stakes disagreements I think unfair coin flipping could be a time-saving tool that leaves both parties feeling accounted for.

-

May 10, 2025

Flight Focus

To me, an airplane cabin is an almost ideal place to read, write, study, focus, etc. I tend to get distracted and procrastinate a lot, but flights have been a pretty good environment for deep thought.

- Minimal distraction: there technically is internet access on many flights, but it costs money, so access is disincentivized. The only available media are the built-in entertainment options or whatever you bring along. There’s minimal range of movement so you can’t even go for a walk or have a look around.

- Hard to sleep: you can’t easily snooze because it’s uncomfortable and you’re stuck sitting upright

- Can’t leave

- Predetermined duration: you know approximately how long you’ll be sitting there, making it possible to plan focus blocks

Maybe one day there will be a flight passenger simulator where you can go get strapped in for 7 hours, offering the deep focus afforded by a flight without the dry air, pressure changes, cost, etc.